Organizations today rely heavily on fast, dependable data flows to support critical decisions in finance, e-commerce, healthcare, and beyond. Conventional ETL (Extract, Transform, Load) methods provide a foundation but can become inflexible as data sources grow in number and complexity. AI-powered ETL services augment traditional practices by using machine learning to adapt quickly to change, detect anomalies automatically, and streamline data processing at scale. Below is a closer look at the challenges decision-makers face when adopting AI-driven data pipelines, and at the approaches that address them.

Growing demands on ETL services

Enterprises ingest far more data than ever before, in many formats and over many protocols. As this variety and velocity grow, manual or rules-based pipelines can miss unusual records, outdated schemas, or shifts in usage patterns. AI approaches address these limitations by learning continuously from the data and refining how it is processed. An AI ETL solution is particularly strong at:

Anomaly detection: Statistical and machine learning models flag suspicious or inconsistent data as soon as it arrives, lowering the risk that bad inputs reach downstream systems.

Adaptive transformations: Rather than updating transformations by hand, teams can rely on models that adjust to subtle format changes or newly introduced fields.

Smarter compliance: AI can bridge legacy systems and obsolete data formats with modern platforms that follow current data standards, simplifying compliance work. This is especially useful in heavily regulated sectors such as banking, healthcare, and insurance.
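The article does not say which models an AI ETL service uses for anomaly detection. As a minimal sketch of the statistical end of that spectrum, the Python function below flags values using a modified z-score (median and median absolute deviation), a simple technique swapped in here for illustration rather than anything the source prescribes. The function name `flag_anomalies` and the 3.5 cut-off (a commonly cited default for this score) are assumptions; a production service would more likely learn thresholds per field or per source.

```python
import statistics
from typing import Sequence


def flag_anomalies(values: Sequence[float], threshold: float = 3.5) -> list[int]:
    """Return indices of values whose modified z-score exceeds `threshold`.

    Hypothetical helper. The median and median absolute deviation (MAD) are
    used instead of mean/standard deviation because they are far less
    distorted by the very outliers we are trying to catch.
    """
    if not values:
        return []
    med = statistics.median(values)
    mad = statistics.median([abs(v - med) for v in values])
    if mad == 0:
        # More than half the values equal the median: flag anything that differs.
        return [i for i, v in enumerate(values) if v != med]
    # 0.6745 rescales MAD so the score is comparable to a standard z-score
    return [i for i, v in enumerate(values) if 0.6745 * abs(v - med) / mad > threshold]


# Example: one transaction amount is wildly out of line with the rest
amounts = [102.0, 98.5, 101.2, 99.9, 100.4, 5400.0, 97.8]
print(flag_anomalies(amounts))  # → [5]
```

In a pipeline, the flagged indices would typically route records to a quarantine area for review instead of loading them.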

Architectural considerations

When updating legacy ETL or building new pipelines, architects often combine established tools such as Apache Airflow (orchestration) and Apache Kafka (streaming) with AI toolkits that handle both batch and real-time data. Many organizations adopt a layered approach:

Data ingestion: AI classifies incoming streams, assigns metadata, and checks for glaring inconsistencies. Whether data is pulled from on-premises databases or cloud APIs, this stage ensures that heterogeneous sources are handled uniformly.

Processing cluster: GPU-enabled or distributed CPU nodes run AI inference for data cleaning, anomaly detection, or pattern recognition. Tasks such as feature engineering and reinforcement learning may occur here.

Target system: Cleaned and validated data is stored in a warehouse, data lake, or specialized analytics platform. Role-based access policies control who can see sensitive information, supporting compliance with regulations such as PSD2 or GDPR.
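To make the three layers concrete, here is a small, self-contained Python sketch. Everything in it is hypothetical: the `REQUIRED_FIELDS` schema, the `ingest` and `process` functions, and the in-memory `Warehouse` class stand in for real ingestion services, AI-driven cleaning, and a warehouse with access controls. The processing step uses plain normalization rules where a real deployment would run model inference.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional

REQUIRED_FIELDS = {"id", "amount", "currency"}  # hypothetical minimal schema


@dataclass
class Record:
    payload: dict
    metadata: dict = field(default_factory=dict)


def ingest(raw: dict, source: str) -> Optional[Record]:
    """Ingestion layer: attach metadata and reject glaringly inconsistent input."""
    if REQUIRED_FIELDS - raw.keys():
        return None  # a real pipeline would send this to a quarantine/dead-letter store
    meta = {"source": source, "ingested_at": datetime.now(timezone.utc).isoformat()}
    return Record(payload=dict(raw), metadata=meta)


def process(record: Record) -> Record:
    """Processing layer: simple normalization standing in for model-driven cleaning."""
    p = record.payload
    p["currency"] = str(p["currency"]).strip().upper()
    p["amount"] = round(float(p["amount"]), 2)
    return record


class Warehouse:
    """Target layer: stores records and masks sensitive fields for non-privileged roles."""

    def __init__(self, sensitive_fields: set, privileged_roles: set):
        self._rows: list = []
        self._sensitive = sensitive_fields
        self._privileged = privileged_roles

    def load(self, record: Record) -> None:
        self._rows.append(record)

    def read(self, role: str) -> list:
        if role in self._privileged:
            return [dict(r.payload) for r in self._rows]
        return [
            {k: ("***" if k in self._sensitive else v) for k, v in r.payload.items()}
            for r in self._rows
        ]


# Usage: one valid record passes through all layers; one is rejected at ingestion
wh = Warehouse(sensitive_fields={"account"}, privileged_roles={"auditor"})
for raw in [
    {"id": 1, "amount": "19.999", "currency": " eur", "account": "ACC-001"},
    {"id": 2, "amount": "5"},  # missing "currency"
]:
    rec = ingest(raw, source="payments-api")
    if rec is not None:
        wh.load(process(rec))
```

Keeping each layer behind its own small interface is what lets the ingestion checks or the cleaning models be swapped without touching the target system's access rules.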

Securing high-stakes data

Financial services, healthcare, and similar sectors have stringent rules around client data. AI ETL frameworks must align with these requirements without sacrificing performance:

Encryption and key management: Protect data at rest (e.g., AES-256) and in transit (TLS). Keys are often generated and stored in hardware security modules (HSMs).

Compliance-driven logging: Detailed logs record when data enters the pipeline, how it is transformed, and who or what initiated each action. In regulated sectors, this trail can be vital for regulatory audits and dispute resolution.

Zero trust architecture: Grant minimal privileges and compartmentalize each step of the pipeline. If one part is compromised, attackers cannot freely move to other segments.
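One way to make such an audit trail tamper-evident, not described in the source and shown here only as an assumption-laden sketch, is to hash-chain the log: each entry stores the SHA-256 hash of the previous entry, so altering any earlier record breaks every link after it. The `AuditLog` class and its field names are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditLog:
    """Hypothetical append-only audit trail where each entry commits to the previous one."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self) -> None:
        self.entries: list = []

    def append(self, actor: str, action: str, dataset: str) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = {
            "ts": datetime.now(timezone.utc).isoformat(),
            "actor": actor,      # user or service identity that initiated the step
            "action": action,    # e.g. "ingest", "transform:dedupe", "load"
            "dataset": dataset,
            "prev_hash": prev_hash,
        }
        body["hash"] = self._digest(body)
        self.entries.append(body)
        return body

    @staticmethod
    def _digest(body: dict) -> str:
        # Hash a canonical JSON encoding of every field except the hash itself
        content = {k: v for k, v in body.items() if k != "hash"}
        encoded = json.dumps(content, sort_keys=True, separators=(",", ":")).encode()
        return hashlib.sha256(encoded).hexdigest()

    def verify(self) -> bool:
        """Recompute every hash and check each entry links to its predecessor."""
        prev = self.GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev or entry["hash"] != self._digest(entry):
                return False
            prev = entry["hash"]
        return True
```

Hash chaining makes tampering detectable but does not prevent it: anyone able to rewrite the whole log can recompute every hash, so the latest hash would need to be anchored somewhere the pipeline itself cannot modify, such as write-once storage.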

Balancing performance and cost

Introducing AI can yield significant operational improvements but also increase computational loads. Decision-makers typically weigh throughput, latency, and budget when planning an AI ETL deployment. Potential tactics include:

Autoscaling: Cloud resources scale up during occasional peaks and back down afterward, so teams do not pay for peak capacity around the clock.

Model compression: Methods like model pruning or distillation can reduce the overhead of running inference without substantially lowering detection accuracy.

Streamlined orchestration: Break tasks into micro-batches or fine-grained events, triggering immediate processing as data arrives rather than relying on scheduled bulk jobs.
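The micro-batching idea can be sketched as a small buffer that flushes either when it holds enough events or when its oldest event has waited long enough, which bounds both per-call overhead and latency. The `MicroBatcher` class, its parameters, and its defaults are illustrative assumptions, not any particular framework's API; stream-processing platforms generally provide their own batching controls.

```python
import time
from typing import Callable, Generic, List, TypeVar

T = TypeVar("T")


class MicroBatcher(Generic[T]):
    """Hypothetical buffer that hands events to `handler` in small batches.

    A batch is flushed when it reaches `max_size` events, or when its oldest
    event has waited at least `max_wait` seconds (checked on add() and poll()).
    """

    def __init__(self, handler: Callable[[List[T]], None], max_size: int = 100,
                 max_wait: float = 1.0, clock: Callable[[], float] = time.monotonic):
        self._handler = handler
        self._max_size = max_size
        self._max_wait = max_wait
        self._clock = clock          # injectable so the timing logic can be tested
        self._batch: List[T] = []
        self._opened_at = 0.0        # time the oldest buffered event arrived

    def add(self, event: T) -> None:
        if not self._batch:
            self._opened_at = self._clock()
        self._batch.append(event)
        if len(self._batch) >= self._max_size:
            self.flush()
        else:
            self.poll()

    def poll(self) -> None:
        """Flush a partial batch whose oldest event has waited too long."""
        if self._batch and self._clock() - self._opened_at >= self._max_wait:
            self.flush()

    def flush(self) -> None:
        if self._batch:
            batch, self._batch = self._batch, []
            self._handler(batch)
```

Because there is no background thread, the consumer loop should call `poll()` periodically (for example, whenever a read from the source times out) so a quiet stream still delivers its last partial batch within roughly `max_wait` seconds.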

Future directions and benefits

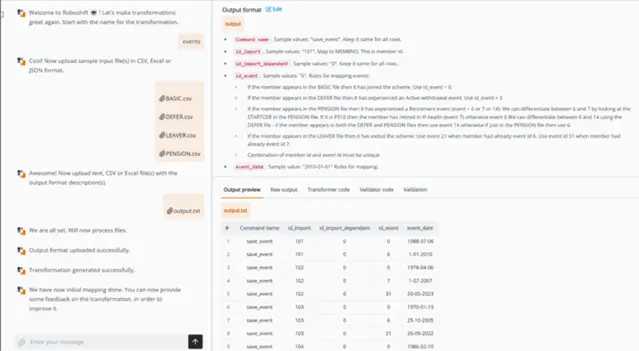

AI ETL is evolving rapidly, incorporating techniques such as unsupervised clustering, more refined anomaly detection, and deeper modeling to spot patterns in dynamic datasets. Regulators increasingly emphasize robust data lineage and resilient design, so organizations need AI-driven tools to maintain accuracy and compliance at scale. When leaders invest in advanced ETL services, like those offered by Blocshop, they can manage growing data volumes while preserving data quality and security. Blocshop’s own AI-based tool, Roboshift, accelerates data processes by a factor of ten and reduces operational costs.

Take the next step with a free consultation from Blocshop

If you’re ready to optimize your data pipelines, arrange a complimentary session with Blocshop to learn how an AI-focused ETL approach can eliminate bottlenecks, meet regulatory needs, and deliver stronger performance for your enterprise.